Section: New Results

Partnerships

Participants : Wendy Mackay, Jessalyn Alvina, Ghita Jalal, Joseph Malloch, Nolwenn Maudet.

ExSitu is interested in designing effective human-computer partnerships, in which expert users control their interaction with technology. Rather than treating human users as the 'input' to a computer algorithm, we explore human-centered machine learning, where the goal is to use machine learning and other techniques to increase human capabilities. Much of human-computer interaction research focuses on measuring and improving productivity; our specific goal is to create what we call 'co-adaptive systems' that are discoverable, appropriable and expressive for the user. Interactive program restructuring [28] offers a concrete example, where expert programmers interact with dynamic visualisations of parallel programs to better understand and organize their code. Similarly, tools such as Color Partner generate color suggestions based on the user's input, helping users guide their discovery of new color possibilities, and Linkify helps users create rules that define how visual properties should change in different user contexts (see Jalal's dissertation).

We hosted the 30-person ERC CREATIV workshop in Paris to explore our concepts of Co-adaptive Systems (including human-centered machine learning) and Instrumental Interaction (including substrates) with prominent researchers from Stanford University, New York University, University of Aarhus, Goldsmiths College, University of Toulouse, IRCAM, University of British Columbia, UC San Diego, and UC Berkeley. Our long-term, admittedly ambitious, goal is to create a unified theory of interaction grounded in how people interact with the world. Our principles of co-adaptive systems and instrumental interaction offer a generative approach for supporting creative activities, from early exploration to implementation. The workshop launched several research projects that are currently in progress or will be published in 2017.

Human-Centred Machine Learning:

We begin by challenging some of the standard assumptions surrounding Machine Learning, clearly one of the most important and successful techniques in contemporary computer science. It involves the statistical inference of models (such as classifiers) from data. However, all too often, the focus is on impersonal algorithms that work autonomously on passively collected data, rather than on dynamic algorithms that progressively reveal their progress to support human users. We collaborated on a workshop at the CHI 2017 conference, entitled "Human-centred Machine Learning" [15], with colleagues from IRCAM, Goldsmiths College, and Microsoft Research. We seek a different understanding of the 'human-in-the-loop', where the focus is less on the human user as input to an algorithm and more on the algorithm in service of a human user. Examining machine learning from a human-centred perspective includes explicitly recognising the human work involved in creating these algorithms, as well as the situated use of these algorithms in human work practices. A human-centred understanding of machine learning in its human context can lead not only to more usable machine learning tools, but also to new ways of framing learning computationally.

Supporting Expressivity:

We helped organize and participated in a workshop at CHI 2017, "Human Computer Interaction meets Computer Music" [27], where we described the results of the MIDWAY Équipe Associée project (with McGill University, ExSitu and the MINT EP at Inria Lille). We presented results of our extensive research with contemporary music composers, in particular our strategy for developing 'co-adaptive instruments'. This involves a paradigm shift, where the goal of the technology is not necessarily the accuracy of a particular result, but rather the human user's ability to express themselves through the technology.

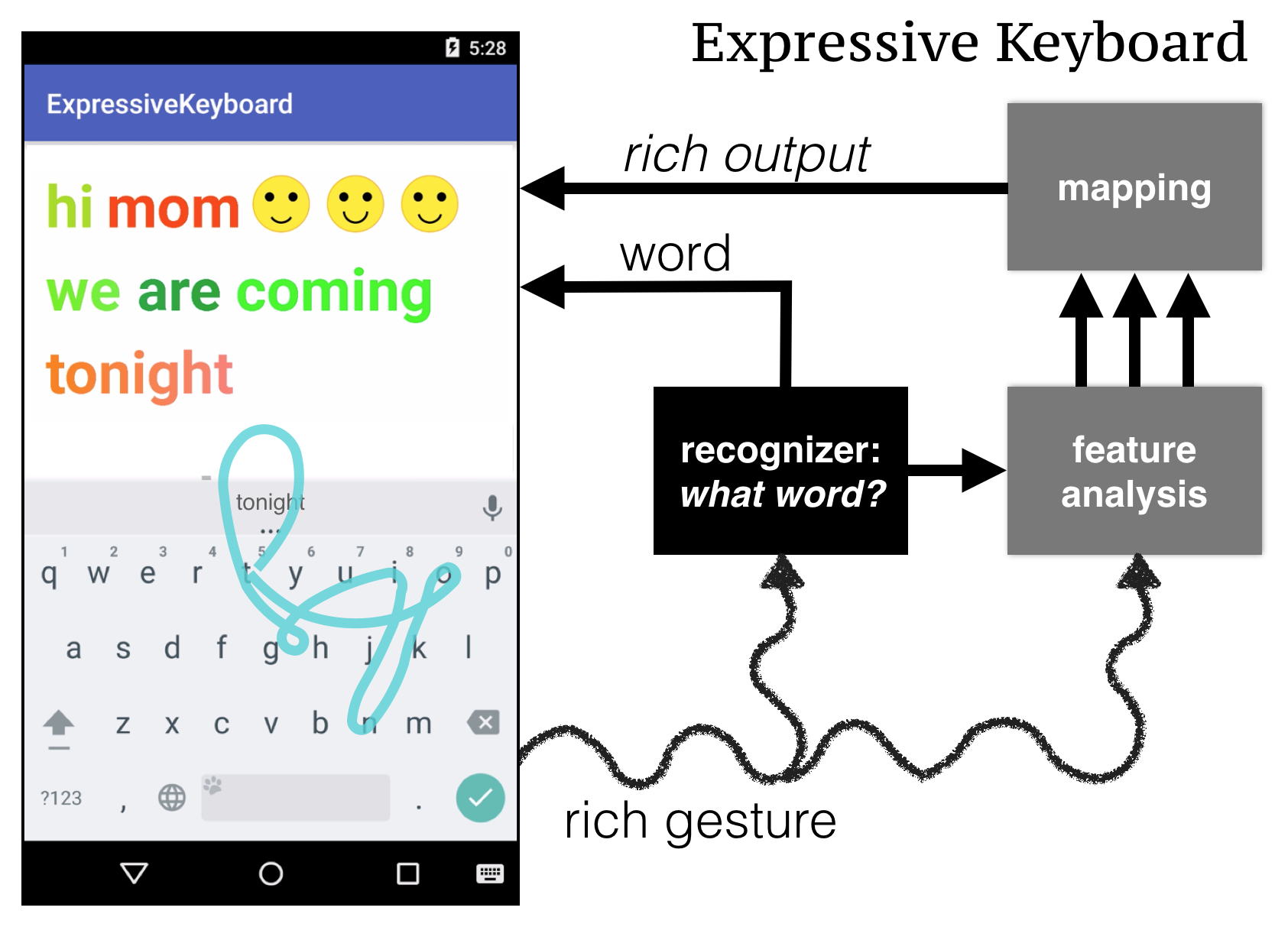

We also explored how machine learning can be rethought to support human-computer partnerships in everyday interaction. We built on gesture-typing, which offers users an efficient, easy-to-learn, and error-tolerant technique for producing typed text on a soft keyboard. Our focus was not on improving recognition accuracy, which we take as a given, but rather on how to make gesture-typed output more expressive. Experiment 1 demonstrated that users vary word gestures according to instructions (accurately, quickly or creatively) as well as specific characteristics of each word, including length, angle, and letter repetition. We showed that users produce highly divergent gestures, with three easily detectable characteristics: curviness, size, and speed. We created the Expressive Keyboard [10], which maps these characteristics to color variations, thus allowing users to control both the content and the color of gesture-typed words (Figure 4). Experiment 2 demonstrated that users can successfully control their gestures to produce the desired colored output, and that they find it easier to react to visual feedback than to explicitly control the characteristics of each gesture. Expressive keyboards can map gestural input to any of a variety of output characteristics, such as personalized handwriting and dynamic emoticons, letting users transform gesture variation into expressivity without sacrificing accuracy.
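To make the idea of mapping gesture characteristics to color more concrete, the following is a minimal sketch in Python and not the Expressive Keyboard's actual implementation. It rests on several assumptions that do not come from the work described above: a gesture is a list of `(x, y, t)` samples, curviness is approximated by the total absolute turning angle, size by the diagonal of the bounding box, speed by path length over duration, and each feature drives one RGB channel after normalization by hypothetical maxima (`max_curviness`, `max_size`, `max_speed`).

```python
import math


def gesture_features(points):
    """Compute illustrative features from (x, y, t) samples (t in seconds).

    These definitions are assumptions made for this sketch; they are not
    the measures used in the Expressive Keyboard study.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]

    # Path length: sum of distances between consecutive samples.
    path = 0.0
    for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]):
        path += math.hypot(x1 - x0, y1 - y0)

    # Size: diagonal of the gesture's bounding box.
    size = math.hypot(max(xs) - min(xs), max(ys) - min(ys))

    # Curviness: total absolute change of heading along the path (radians).
    curviness = 0.0
    for i in range(1, len(points) - 1):
        a_in = math.atan2(ys[i] - ys[i - 1], xs[i] - xs[i - 1])
        a_out = math.atan2(ys[i + 1] - ys[i], xs[i + 1] - xs[i])
        # Wrap the heading difference into [-pi, pi) before accumulating.
        turn = (a_out - a_in + math.pi) % (2 * math.pi) - math.pi
        curviness += abs(turn)

    # Speed: path length divided by total duration.
    duration = points[-1][2] - points[0][2]
    speed = path / duration if duration > 0 else 0.0

    return {"curviness": curviness, "size": size, "speed": speed}


def clamp01(value):
    """Clamp a number to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def features_to_rgb(features, max_curviness=2 * math.pi,
                    max_size=500.0, max_speed=2000.0):
    """Map each feature to one color channel (hypothetical mapping)."""
    r = round(255 * clamp01(features["curviness"] / max_curviness))
    g = round(255 * clamp01(features["size"] / max_size))
    b = round(255 * clamp01(features["speed"] / max_speed))
    return (r, g, b)


# Usage: a straight, moderately fast stroke versus one with a sharp corner.
straight = [(0, 0, 0.0), (100, 0, 0.25), (200, 0, 0.5)]
corner = [(0, 0, 0.0), (100, 0, 1.0), (100, 100, 2.0)]
print(features_to_rgb(gesture_features(straight)))
print(features_to_rgb(gesture_features(corner)))
```

In this sketch the recognized word would be determined separately from the color, so variation in how a gesture is drawn changes only the output's appearance and not its content.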